Meta has built an AI supercomputer it says will be world’s fastest by end of 2022

Social media conglomerate Meta is the latest tech company to build an “AI supercomputer” — a high-speed computer designed specifically to train machine learning systems. The company says its new AI Research SuperCluster, or RSC, is already among the fastest machines of its type and, when complete in mid-2022, will be the world’s fastest.

“Meta has developed what we believe is the world’s fastest AI supercomputer,” said Meta CEO Mark Zuckerberg in a statement. “We’re calling it RSC for AI Research SuperCluster and it’ll be complete later this year.”

The news demonstrates the absolute centrality of AI research to companies like Meta. Rivals like Microsoft and Nvidia have already announced their own “AI supercomputers,” which are slightly different from what we think of as regular supercomputers. RSC will be used to train a range of systems across Meta’s businesses: from content moderation algorithms used to detect hate speech on Facebook and Instagram to augmented reality features that will one day be available in the company’s future AR hardware. And, yes, Meta says RSC will be used to design experiences for the metaverse — the company’s insistent branding for an interconnected series of virtual spaces, from offices to online arenas.

“RSC will help Meta’s AI researchers build new and better AI models that can learn from trillions of examples; work across hundreds of different languages; seamlessly analyze text, images, and video together; develop new augmented reality tools; and much more,” write Meta engineers Kevin Lee and Shubho Sengupta in a blog post outlining the news.

“We hope RSC will help us build entirely new AI systems that can, for example, power real-time voice translations to large groups of people, each speaking a different language, so they can seamlessly collaborate on a research project or play an AR game together.”

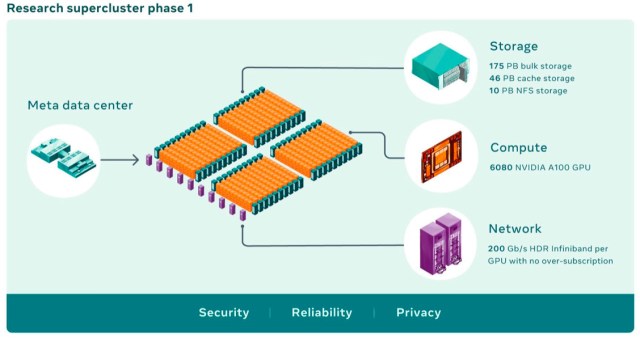

Work on RSC began a year and a half ago, with Meta’s engineers designing the machine’s cooling, power, networking, and cabling systems entirely from scratch. Phase one of RSC is already up and running and consists of 760 Nvidia DGX A100 systems containing 6,080 connected GPUs (a type of processor that’s particularly good at tackling machine learning problems). Meta says it’s already delivering up to 20 times better performance on its standard machine vision research tasks.

Before the end of 2022, though, phase two of RSC will be complete. At that point, it’ll contain some 16,000 total GPUs and will be able to train AI systems “with more than a trillion parameters on data sets as large as an exabyte.” (This raw number of GPUs only provides a narrow metric for a system’s overall performance, but, for comparison’s sake, Microsoft’s AI supercomputer, developed with research lab OpenAI, contains 10,000 GPUs.)
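For a rough sense of scale, the GPU counts quoted above can be worked through in a few lines of Python. This is only a back-of-the-envelope sketch: the figure of eight GPUs per system is inferred from the article's own numbers (6,080 GPUs across 760 systems), the function name is made up for illustration, and "some 16,000" is treated as exactly 16,000.

```python
# Back-of-the-envelope GPU arithmetic using the figures quoted in the article.
# Assumption: 8 GPUs per system, inferred from 6,080 GPUs / 760 systems.
GPUS_PER_SYSTEM = 8


def total_gpus(systems: int, gpus_per_system: int = GPUS_PER_SYSTEM) -> int:
    """Return the GPU count for a given number of identical systems."""
    return systems * gpus_per_system


PHASE_ONE_GPUS = total_gpus(760)   # phase one, already running
PHASE_TWO_GPUS = 16_000            # "some 16,000", treated as exact here
MICROSOFT_GPUS = 10_000            # Microsoft/OpenAI machine, per the article

if __name__ == "__main__":
    print(f"Phase one GPUs: {PHASE_ONE_GPUS}")
    print(f"Phase two vs. phase one: {PHASE_TWO_GPUS / PHASE_ONE_GPUS:.2f}x")
    print(f"Phase two vs. Microsoft: {PHASE_TWO_GPUS / MICROSOFT_GPUS:.1f}x")
```

As the article notes, a raw GPU count is a narrow metric, so these ratios say nothing definitive about real training speed.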

These numbers are all very impressive, but they do invite the question: what is an AI supercomputer anyway? And how does it compare to what we usually think of as supercomputers — vast machines deployed by universities and governments to crunch numbers in complex domains like space, nuclear physics, and climate change?

The two types of systems, known as high-performance computers or HPCs, are certainly more similar than they are different. Both are closer to datacenters than individual computers in size and appearance and rely on large numbers of interconnected processors to exchange data at blisteringly fast speeds. But there are key differences between the two, as HPC analyst Bob Sorensen of Hyperion Research explains to The Verge. “AI-based HPCs live in a somewhat different world than their traditional HPC counterparts,” says Sorensen, and the big distinction is all about accuracy.

The brief explanation is that machine learning requires less accuracy than the tasks put to traditional supercomputers, and so “AI supercomputers” (a bit of recent branding) can carry out more calculations per second than their regular brethren using the same hardware. That means when Meta says it’s built the “world’s fastest AI supercomputer,” it’s not necessarily a direct comparison to the supercomputers you often see in the news (rankings of which are compiled by the independent Top500.org and published twice a year).

To explain this a little more, you need to know that both supercomputers and AI supercomputers make calculations using what is known as floating-point arithmetic — a mathematical shorthand that’s extremely useful for making calculations using very large and very small numbers (the “floating point” in question is the decimal point, which “floats” between significant figures). The degree of accuracy deployed in floating-point calculations can be adjusted based on different formats, and the speed of most supercomputers is calculated using what are known as 64-bit floating-point operations per second, or FLOPs. However, because AI calculations require less accuracy, AI supercomputers are often measured in 32-bit or even 16-bit FLOPs. That’s why comparing the two types of systems is not necessarily apples to apples, though this caveat doesn’t diminish the incredible power and capacity of AI supercomputers.
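To make the accuracy trade-off concrete, here is a minimal Python sketch that stores the same number in 64-bit, 32-bit, and 16-bit floating-point formats and reads it back. It uses only the standard `struct` module; the helper names (`round_trip`, `precision_table`) are invented for this illustration and have nothing to do with how Meta or anyone else benchmarks a machine.

```python
import struct

# struct format codes for IEEE 754 double, single, and half precision.
FORMATS = {"64-bit": "<d", "32-bit": "<f", "16-bit": "<e"}


def round_trip(value: float, fmt: str) -> float:
    """Store value in the given format, then read back what was kept."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def precision_table(value: float) -> dict:
    """Map each format name to the value it actually stores."""
    return {name: round_trip(value, fmt) for name, fmt in FORMATS.items()}


if __name__ == "__main__":
    target = 0.1
    for name, stored in precision_table(target).items():
        error = abs(stored - target)
        print(f"{name}: stored {stored!r}, error {error:.3e}")
```

Run on 0.1, the error grows as the format shrinks: the fewer bits available, the further the stored value drifts from the original. Lower-precision formats take up less memory and are cheaper to compute with, which is why the same hardware can post a higher operations-per-second figure at 16 bits than at 64.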

Sorensen offers one extra word of caution, too. As is often the case with the “speeds and feeds” approach to assessing hardware, vaunted top speeds are not always representative. “HPC vendors typically quote performance numbers that indicate the absolute fastest their machine can run. We call that the theoretical peak performance,” says Sorensen. “However, the real measure of a good system design is one that can run fast on the jobs they are designed to do. Indeed, it is not uncommon for some HPCs to achieve less than 25 percent of their so-called peak performance when running real-world applications.”
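Sorensen's point boils down to simple arithmetic, sketched below in Python. The peak figure in the example is a placeholder chosen purely for illustration, not a number reported for RSC or any real machine, and the function name is hypothetical.

```python
def sustained_performance(peak_flops: float, efficiency: float) -> float:
    """Estimate real-world throughput from a quoted peak figure.

    efficiency is the fraction of theoretical peak actually achieved,
    expressed as a number between 0 and 1.
    """
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    return peak_flops * efficiency


if __name__ == "__main__":
    # Hypothetical machine: the peak below is illustrative only.
    quoted_peak = 1e18   # operations per second, as a vendor might quote
    real_world = sustained_performance(quoted_peak, 0.25)
    print(f"Quoted peak:      {quoted_peak:.2e} operations/s")
    print(f"At 25% of peak:   {real_world:.2e} operations/s")
```

A machine that reaches only a quarter of its quoted peak is, for practical purposes, a quarter of the machine on the spec sheet.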

In other words: the true utility of supercomputers is to be found in the work they do, not their theoretical peak performance. For Meta, that work means building moderation systems at a time when trust in the company is at an all-time low and means creating a new computing platform — whether based on augmented reality glasses or the metaverse — that it can dominate in the face of rivals like Google, Microsoft, and Apple. An AI supercomputer offers the company raw power, but Meta still needs to find the winning strategy on its own.
